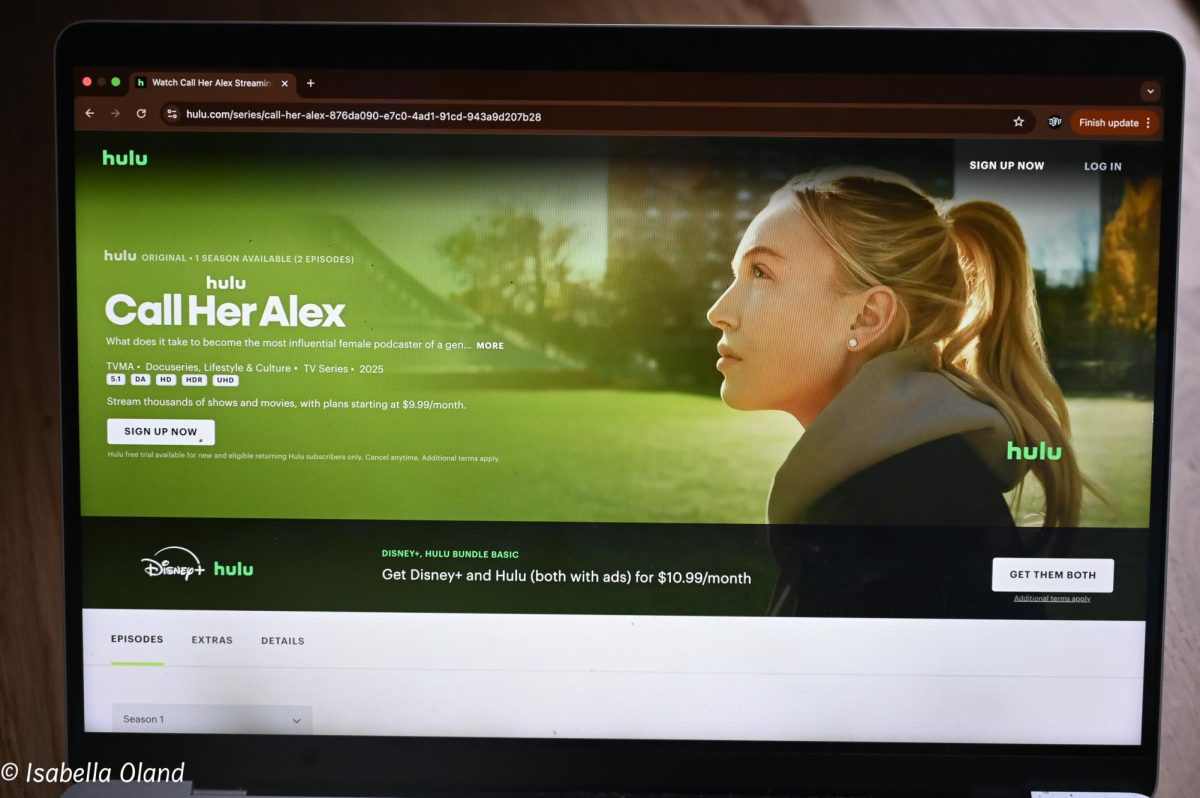

Picture this scenario: Charlie is a 17-year-old girl who has just been diagnosed with celiac disease, a condition that prevents her from eating gluten. An avid social media user, Charlie starts to follow foodies on Instagram who post gluten-free recipes.

Within a few days, Charlie notices that her Instagram feed is populated with influencers who have thin bodies, and she starts to feel uncomfortable in her own skin. She does not understand why, but the more insecure she feels, the more she scrolls through Instagram.

After a few more days, content that glorifies eating disorders appears.

Frances Haugen, a former Facebook product manager, explained during her testimony last month before the Senate Commerce subcommittee how Instagram’s algorithms have been found to push teenagers into dark, unhealthy places.

“Facebook knows its engagement ranking on Instagram can lead children from very innocuous topics like healthy recipes … to anorexic content over a very short period of time,” Haugen said during her testimony.

Internal research teams at Facebook knew that the company’s algorithms were harming young people’s mental health, according to Haugen. She added that even though researchers offered suggestions on how to make the platform safer, Facebook did not act on them.

“There is a pattern of behavior that I saw [at] Facebook: Facebook choosing to prioritize its profits over people,” said Haugen.

Researchers at Facebook found that 66% of teenage girls and 40% of teenage boys experience negative social comparison while on Instagram. Their findings also show that 52% of teen girls experience negative social comparison related to beauty standards, which can trigger downward spirals and body dysmorphia.

To expose these dangerous truths, Haugen handed over internal documents to media outlets, including The Wall Street Journal, which ran a multi-part series called “The Facebook Files.”

The former Facebook employee has helped others see that young people are prone to the addictive and damaging effects of social media platforms, much as they are prone to tobacco use. Members of Congress now say that Big Tech is having a Big Tobacco moment.

According to Haugen, Facebook understands the importance of drawing young people onto its platforms in order to expand and monetize. “They know that children bring their parents online,” she said.

In 2019, according to Haugen, Facebook redesigned its algorithm around a metric called downstream MSI, or Meaningful Social Interaction, which has caused a proliferation of anorexia-promoting content, hate speech and misinformation. When the algorithm predicts that a post will go viral, it shows that post to a wider audience, which can have deeply problematic effects.

Facebook’s internal staff has long known that limiting downstream MSI would minimize the propagation of harmful content and disinformation. Haugen theorized that downstream MSI is still widely used today because viral posts are tied to bonuses.

Some change has been enacted, though. Amid the fallout from the leaked documents, Facebook paused “Instagram Kids,” a platform made for children under the age of 13.

“I would be sincerely surprised if they do not continue working on Instagram Kids,” said Haugen.

She said that Facebook, like Big Tobacco, wants to guarantee that the next generation is just as hooked on its product as the current one.

To break this harmful cycle, Haugen asked that Congress step in and hold Zuckerberg and Big Tech accountable. Young people have been negatively impacted by harmful content for far too long, and it is time that Big Tech prioritizes people over profit.

“I believe in the potential of Facebook,” Haugen said. “We can do better.”

Despite the harm and hatred Facebook has caused, Haugen still believes in the power of social media in drawing people together. Her hope for kinder and healthier platforms is inspiring. Such hope is not ready-made, and it will take collective action to reform Big Tech.

When Zuckerberg founded Facebook in 2004, it is unlikely that he could have foreseen how much influence social media would eventually have over us. It is unlikely that he could have foreseen young girls, like Charlie, going onto his platforms and being pushed toward disordered eating. But Facebook’s own research indicates how harmful its algorithms are to young girls’ and boys’ mental health.

Haugen helped us understand just how severe the problem is by exposing Facebook’s internal documents and data. She showed us that when there is no government oversight or regulations, Big Tech companies can continue to prioritize profits over people.

Although companies will always be profit-driven — that is what companies are made to do — she shows us what can happen when that balance between monetization and public interest goes awry: Greed becomes a core pillar, and hurt becomes a byproduct.

Facebook knows better, and they can do better.