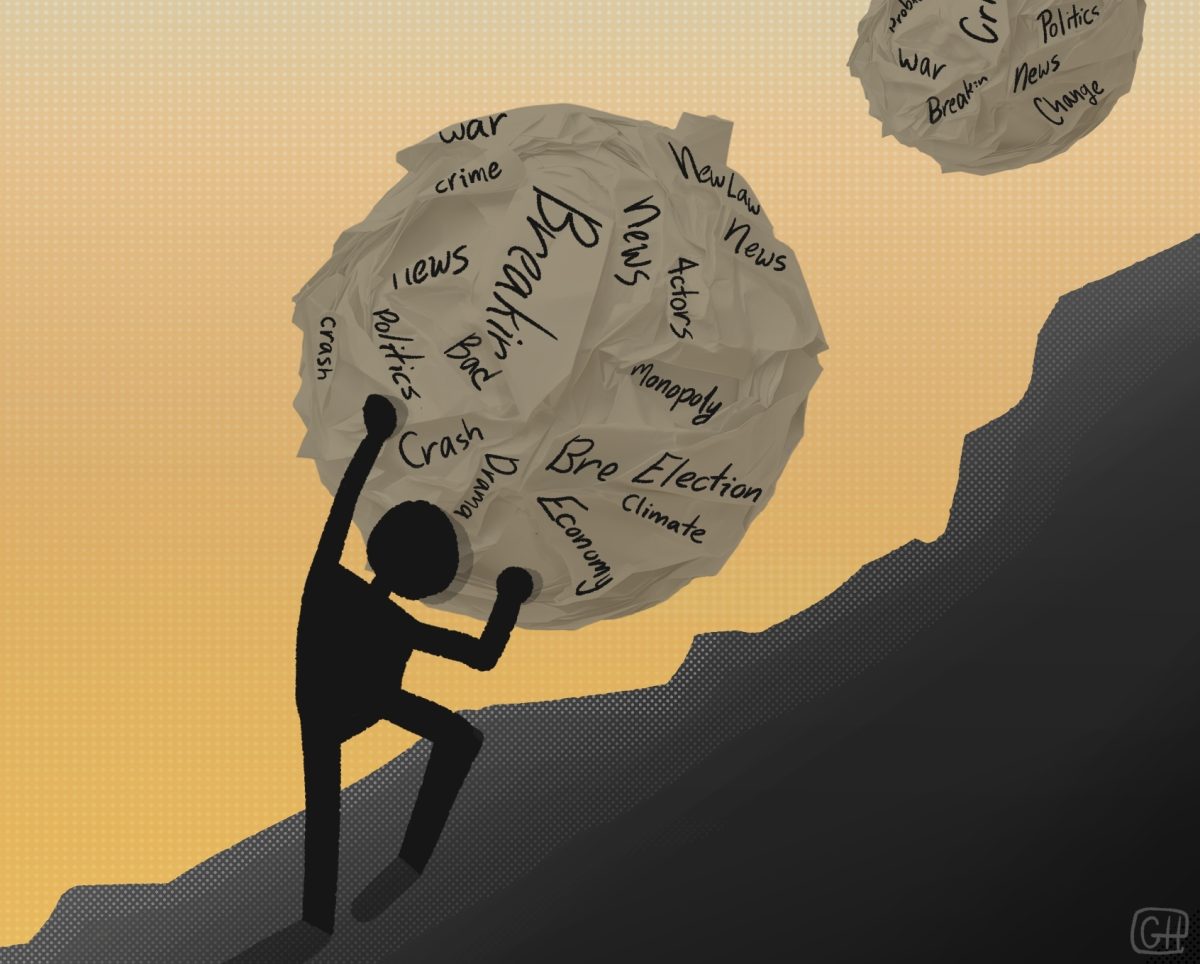

My attention to the United States’ upcoming presidential election has been admittedly Laodicean, as I find myself neither uninterested nor wholeheartedly enthusiastic about the remaining candidates and their platforms, or lack thereof. But I have noticed what I consider to be a concerning and perplexing apathy among Americans toward artificial intelligence governance.

Much of the world’s leading AI research occurs in the U.S., which puts American voters in a fortunate position to voice our opinions and influence its development. By extension, voters and legislators bear both the privilege and the responsibility of setting the standards on AI policy, standards with global implications. At the same time, we must be careful not to blow our lead by imposing undue and untimely policy.

Although many experts are uncertain about exactly when artificial general intelligence will be achieved, the industry generally agrees that it may occur in the next few decades. Perhaps most troublingly, artificial superintelligence could follow very soon after AGI. With such a short turnaround time, these changes will leave legislators scrambling to understand the demands of this new regulatory environment and produce worthy legislation for constituents.

For this reason, I’m concerned that voter apathy is setting us up for failure. Even worse, it relegates the tremendous responsibility of AI alignment to corporations and their unstandardized usage policies. Sound familiar?

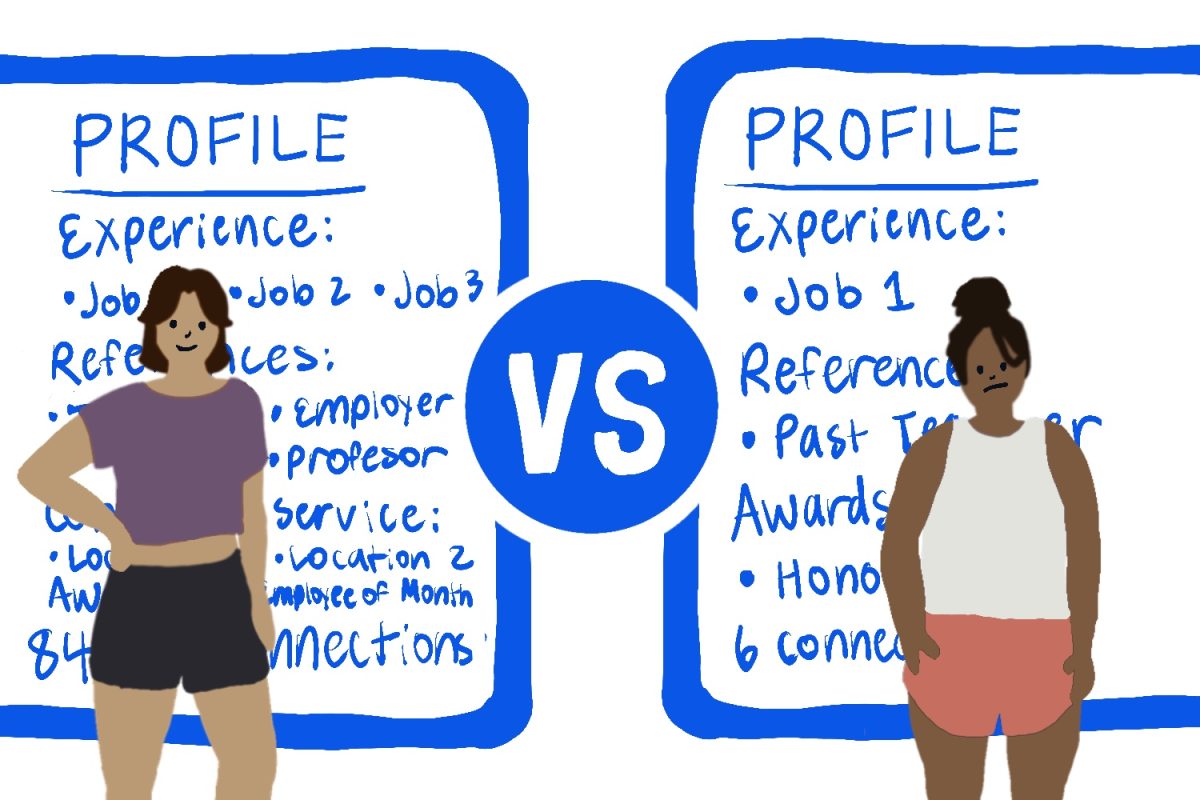

I hope it does. We’ve seen Google’s Project Nightingale covertly gather an estimated 50 million health records from healthcare provider Ascension without patient consent. We’ve seen as many as 87 million Facebook users have their data harvested and used for political profiling and political manipulation. My worry is that, without standardized oversight of AI development, we will similarly allow model developers to become the arbiters of the technology’s use and the gatekeepers of how developers build.

The 2024 Presidential Election has highlighted AI in political discussions, though neither campaign has sufficiently addressed the unrealized existential risks and human-life impact if this technology goes awry. Vice President Kamala Harris has discussed an approach which tackles the “full spectrum” of AI risks. However, she mostly pays directionless lip service to a vague middle ground that holds companies accountable while avoiding overregulation which could stifle innovation.

Despite Harris’s efforts to address AI risks, her comments suggest a lack of depth in understanding the true nature of existential threats. During a speech at the U.S. Embassy in London, she stretched the term “existential” to include personal hardships: “When a senior is kicked off his healthcare plan because of a faulty AI algorithm, is that not existential for him? When a woman is threatened by an abusive partner with explicit, deep-fake photographs, is that not existential for her? When a young father is wrongfully imprisoned because of biased AI facial recognition, is that not existential for his family?”

While I can agree these issues are indeed serious, conflating them with truly existential risks (which are typically terminal and transgenerational, such as engineered pandemics, nuclear warfare or totalitarianism) entirely misses the mark on the dangers of runaway AI. Harris’s failure to address the existential threats of AGI and ASI in her speech is a startling omission in her approach to AI policy.

In her speech, Harris called voluntary commitments from tech companies “an initial step toward a safer AI future,” and to her credit, she’s right. It sure is a good first step, but it’s only that. Relying too heavily on these lukewarm measures assumes that companies will act in the public’s best interest without regulatory pressure.

But in practice, money talks and the bottom line matters. OpenAI, for example, is reportedly removing control from its nonprofit board to restructure into a for-profit benefit corporation. Since its founding in 2015 as a nonprofit research lab with purely altruistic intentions, OpenAI’s two restructurings indicate a move beyond its initial mission for public benefit.

Silicon Valley’s AI race feels like a twisted, for-profit sequel to the Manhattan Project, but without the strict oversight. This time, we’re dealing with a technology as dangerous as nuclear weapons, built by engineers who answer only to their managers and investors, their compensation and their own conscience. That’s exactly the reality of today’s research.

Ultimately, I find that the Biden-Harris Administration’s response to the need for reasonable and smart governance is neither proactive nor adequately reactive. It leaves me and others concerned about Harris’s candidacy and her leadership should she win the presidency.

Zunaer Sharang • Oct 23, 2024 at 7:10 pm

Really liked this piece