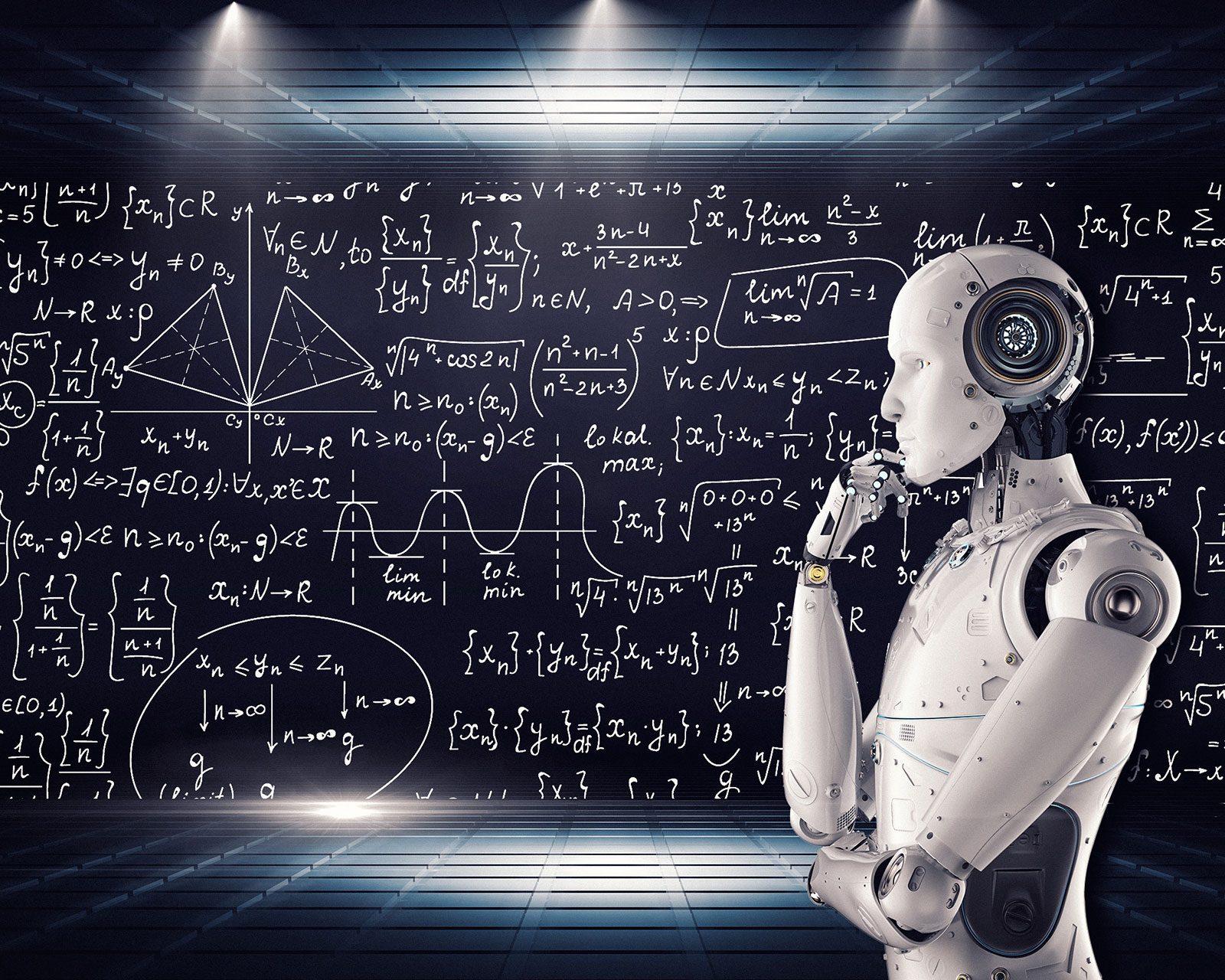

Artificial intelligence has infiltrated the lives of more than 39 million Americans who have purchased smart speakers such as the Amazon Echo, which runs the Alexa voice assistant. Within the field of AI, another trend has emerged: technology that is increasingly complex and difficult to interpret.

Kate Saenko, a professor in Boston University’s College of Arts and Sciences’ Department of Computer Science, has delved into the inner workings of AI systems and how the technology makes decisions.

“[AI is] a more powerful way to program a computer to do a task because, for a lot of intelligent tasks like recognizing faces, it’s very hard for a human being to write down a set of rules or code a set of instructions,” Saenko said.

She said that most of the time, humans don’t know how AI technology performs certain tasks such as facial recognition.

“While for some tasks, it’s easy to write a set of instructions for a machine to follow, for others, it’s very difficult,” Saenko said. “The power of AI or machine learning is that it can learn patterns from data from examples of tasks that it is doing that could be difficult for a human designer to come up with.”

However, Saenko said this can also lead to problems: although humans build AI systems and write the mathematical equations that make them function, the technology does not work the way a human brain does. She emphasized the importance of studying and understanding how AI learns.

“The recent AI techniques have become so complex in terms of what rules they are learning, what patterns they are extrapolating from data, that it became more necessary,” Saenko said.

Saenko presented her recent work, “Explainable Neural Computation via Stack Neural Module Networks,” at the European Conference on Computer Vision in Munich, Germany, on Sept. 11.

The paper was a collaboration with Trevor Darrell, a professor of computer science at the University of California, Berkeley; Jacob Andreas, a senior research scientist at Microsoft Semantic Machines in Berkeley, California; and Ronghang Hu, a Ph.D. student in computer science at UC Berkeley.

In the study’s experiment, subjects viewed images that depicted the computer’s decision-making process, according to Saenko. They knew what question the AI had been asked, but not what answer it gave.

The researchers then asked the subjects whether they thought the AI had answered correctly and whether they felt they could understand the AI’s process.

Saenko said that if participants predicted whether the AI had answered correctly more often than random chance would allow, they were deemed to understand the computer system’s decision-making process.
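The article does not say which statistical test the researchers used. One standard way to check whether yes/no predictions beat chance is a one-sided binomial test, sketched below. The function names, the 20-question example, the 50% chance baseline and the 0.05 threshold are all hypothetical assumptions for illustration, not figures from the study. A 50% baseline fits only when the AI’s answers are split evenly between right and wrong; otherwise `chance` would need to be set to the appropriate baseline.

```python
from math import comb

def binomial_p_value(correct: int, trials: int, chance: float = 0.5) -> float:
    """One-sided p-value: the probability of getting at least `correct`
    predictions right out of `trials` by guessing alone, where each guess
    succeeds with probability `chance` (assumed 0.5 here)."""
    return sum(
        comb(trials, k) * chance**k * (1 - chance) ** (trials - k)
        for k in range(correct, trials + 1)
    )

def above_chance(correct: int, trials: int, alpha: float = 0.05) -> bool:
    # Hypothetical decision rule: treat the predictions as "better than
    # chance" when guessing alone would rarely do this well.
    return binomial_p_value(correct, trials) < alpha

# Hypothetical participant: predicts the AI's correctness on 20 questions
# and gets 15 of those predictions right.
print(round(binomial_p_value(15, 20), 4))  # 0.0207
print(above_chance(15, 20))                # True
```

Under these assumptions, 15 of 20 correct predictions would count as above chance, while 12 of 20 would not (a p-value of roughly 0.25).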

She addressed the issue of human bias in another paper, “RISE: Randomized Input Sampling for Explanation of Black-box Models,” in which the researchers used computational methods to show what a model had learned and how it made its decisions.

Saenko collaborated on this paper with Vitali Petsiuk, a research assistant at BU, and postdoctoral researcher Abir Das. The research was presented at the 29th British Machine Vision Conference at Northumbria University on Sept. 4.
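The article does not explain how RISE works. As described in the published paper, the method treats the model as a black box. It probes the model with many randomly masked copies of an image and credits each pixel with the model’s output score whenever that pixel was visible, which produces a saliency (importance) map. The sketch below is a simplified illustration of that idea, not the authors’ code. It uses plain nearest-neighbour upsampling of the mask grid, whereas the paper uses bilinear upsampling with random shifts. `toy_model`, the 8x8 image and all parameter values are hypothetical.

```python
import random

def generate_mask(height, width, grid, p, rng):
    """Random binary mask: a coarse grid of cells, each kept with
    probability p, upsampled to the image size by nearest neighbour
    (a simplification of the paper's shifted bilinear upsampling)."""
    cells = [[1.0 if rng.random() < p else 0.0 for _ in range(grid)]
             for _ in range(grid)]
    return [[cells[r * grid // height][c * grid // width] for c in range(width)]
            for r in range(height)]

def rise_saliency(model, image, n_masks=1000, grid=4, p=0.5, seed=0):
    """Estimate how much each pixel contributes to model(image)."""
    rng = random.Random(seed)
    h, w = len(image), len(image[0])
    saliency = [[0.0] * w for _ in range(h)]
    for _ in range(n_masks):
        mask = generate_mask(h, w, grid, p, rng)
        masked = [[image[r][c] * mask[r][c] for c in range(w)] for r in range(h)]
        score = model(masked)  # black box: only the output score is used
        # Pixels that were visible when the score was high get more credit.
        for r in range(h):
            for c in range(w):
                saliency[r][c] += score * mask[r][c]
    # Normalise by the number of masks times p (the expected mask value).
    return [[v / (n_masks * p) for v in row] for row in saliency]

def toy_model(img):
    """Hypothetical stand-in for a classifier: its "confidence" is the mean
    brightness of the top-left 4x4 quadrant, so only that region matters."""
    return sum(img[r][c] for r in range(4) for c in range(4)) / 16

def quadrant_mean(s, rows, cols):
    return sum(s[r][c] for r in rows for c in cols) / (len(rows) * len(cols))

image = [[1.0] * 8 for _ in range(8)]
sal = rise_saliency(toy_model, image)
print(quadrant_mean(sal, range(4), range(4)))        # region the model uses
print(quadrant_mean(sal, range(4, 8), range(4, 8)))  # region the model ignores
```

With p = 0.5, the expected saliency is 0.625 for pixels in the top-left quadrant and 0.5 for pixels the toy model ignores, so the printed estimates should land near those values. The map points to the region the model actually relies on without ever looking inside the model.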

“AI researchers always thought about this aspect — explainability and interpretability — but it’s become more urgent more recently because on one hand, AI models are becoming more complex and harder to explain, and on the other hand, they are being used more and more in our everyday lives,” Saenko said.

Adam Smith, a computer science professor at BU, said concerns about the lack of understanding of AI are twofold. First, AI “may fail in ways that we don’t really get.” As an example, Smith said developers must understand how self-driving cars work, because any confusion over how they function could lead to disastrous consequences.

Second, Smith said, a growing number of decisions are now being automated.

“As we automate decisions about people’s lives, like credit or bail, if you don’t understand how that system works, it makes it much easier to wind up in a situation where the system is treating people unfairly or introducing bias we don’t know is there,” Smith said.

Neil Goldader, a CAS sophomore studying computer science, said he thought Saenko’s research brings a new perspective to the field.

“I think that digging into the overlap between the human psyche and machine learning is really interesting, and [Saenko’s] research seems to be providing very tangible results,” Goldader said.