The rapid growth of generative artificial intelligence has sparked debate over its place in the classroom, making it a point of contention across academic disciplines at Boston University.

While some professors and administrators support the use of AI, others see it as a concern.

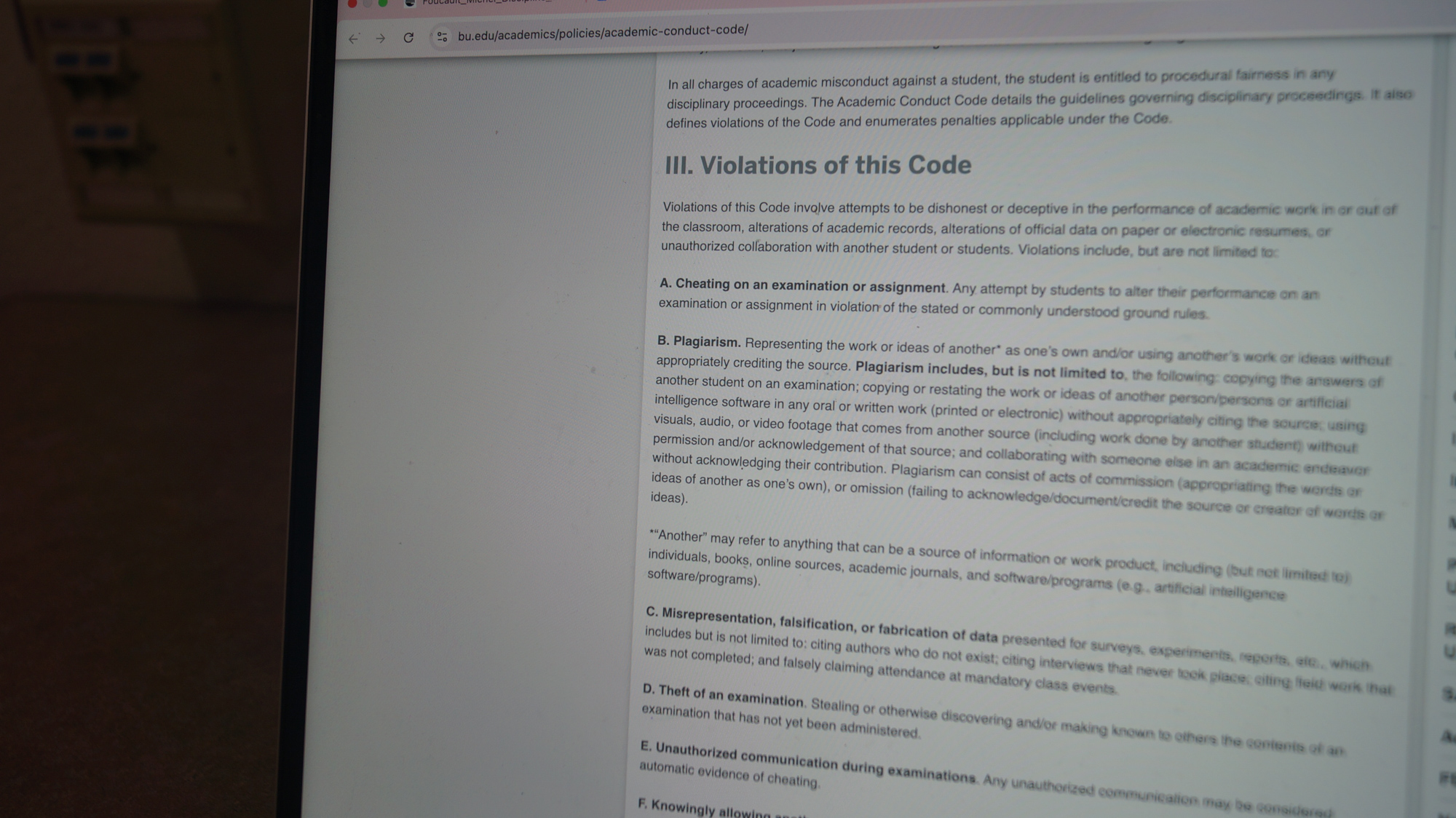

BU does not currently have an institutional policy regarding the use of AI, but considers representing the work of artificial intelligence as one’s own without appropriate credit to be plagiarism, according to the Academic Conduct Code.

In 2023, BU created a task force to examine the use of generative AI in the classroom, which encouraged the University to “critically embrace” it, according to Yannis Paschalidis, distinguished professor of engineering and director of the Rafik B. Hariri Institute for Computing and Computational Science and Engineering.

Paschalidis, a co-leader of the task force, said artificial intelligence can be beneficial if properly used, citing test score improvements for engineering students.

“One would have expected that its use will negatively impact the students’ performance in exams,” Paschalidis wrote in an email to The Daily Free Press. “Instead, the opposite is happening. Students’ performance in exams has improved, highlighting the potential of AI to be used as an effective and personalized tutor.”

Paschalidis said large language models could improve student life and administrative work by answering questions on demand and by automating and streamlining administrative functions such as finance and grant management.

Margaret Wallace, associate professor of the practice in media innovation and lead of the College of Communication AI Advisory Group, said artificial intelligence should supplement human creation rather than replace it.

“I really think it’s important for everyone to have familiarity and competency with these tools, but also to say ‘You know what, these tools are problematic, especially for the creative industries, and if I use it, where does it make sense for me to use these tools?’” Wallace said.

Wallace said she supports using AI tools in “structured” ways, such as designing class activities and graded assignments that involve AI. She also said she is using part of her $5,000 grant from the BU Digital Learning and Innovation Shipley Center to build these tools into course curricula.

Anne Danehy, senior associate dean for academic affairs at COM and associate professor of the practice, agrees that generative AI can serve as a tool to enhance learning in the classroom, despite its challenges.

“We want to help our students understand that [AI] is a tool and it’s just a tool,” Danehy said. “We want to help them to learn about the ethical ways to use generative AI, how to think about it and to think about some of the challenges that are presented with it, so that they are well informed and they’ve had exposure.”

Danehy said COM aims to approach generative AI in its curriculum in line with industry practice, giving students a career-focused perspective on the technology. She said the University has even brought alumni to yearly retreats to discuss how AI is used across media industries.

As a professor of mass communication, advertising and public relations, Danehy incorporates AI into her own courses and said she sees it as an assistive tool that helps students research for classwork and projects.

“You can research infinitely and spend 100 hours doing this research,” Danehy said. “But why do you need to do that when you have a tool that’s going to help you to do that and give you more time to think about the meaning behind that data?”

While professors like Wallace and Danehy embrace AI in the classroom, others see its implementation as a slippery slope.

Erik Peinert, assistant professor of political science, said he understands the conflicting views surrounding AI in the classroom but has opted to ban its use in the classes he teaches.

“Because it’s hard to catch [AI usage] 100% of the time, if you open the door to its minor uses, you end up in a class of say, 40 people, with 15 papers that were transparently written by AI with pristine, but pretty vacuous language,” Peinert said.

Peinert said AI may be more useful for reinforcing ideas students have already learned and understand well, especially in complex classes and themes, than for helping them learn new material.

“Writing is not just hammering out words on a page. It’s thinking,” Peinert said. “It’s putting together ideas in new ways, and being able to articulate your own thoughts in your head, and those are things that AI just can’t do for you. Once you know how to do them very well, it can assist you, and will be a very large force multiplier in helping you do those better later.”