The president of Stanford University, Marc Tessier-Lavigne, stepped down on Aug. 31, 2023 — not because of retirement or personal reasons, but because 12 reports that listed him as an author contained wrong or manipulated information.

In the end, an independent panel found he wasn’t aware of or directly involved in the misrepresentation of the science. Nevertheless, he was still involved in the processes. At the time of stepping down, Tessier-Lavigne stated he planned to retract or issue corrections for the papers in which he was listed as the first author.

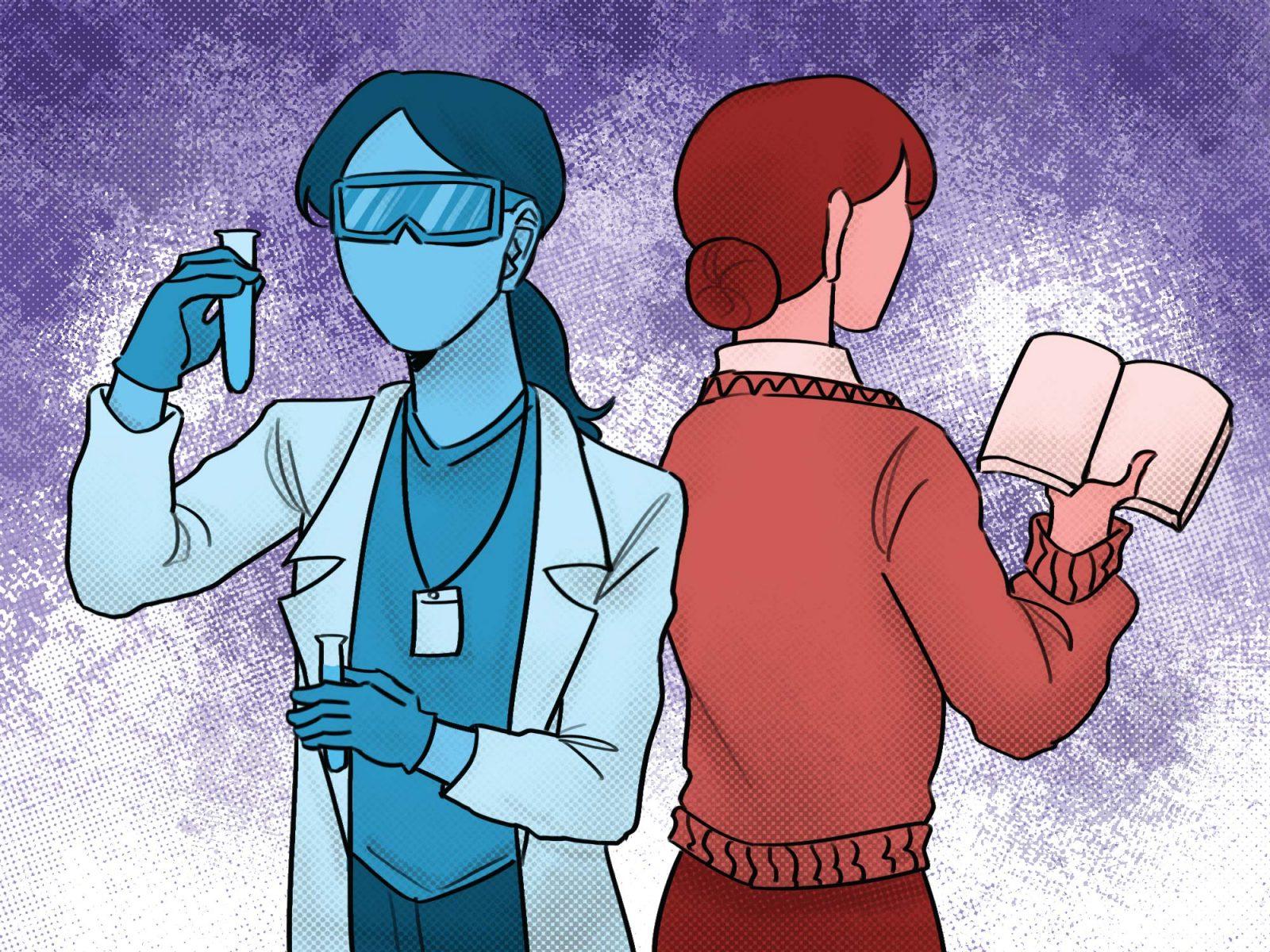

The Stanford scandal is not an isolated event. It represents a microcosm of many of the problems related to publishing within the scientific community.

Take reproducibility, for example: the ability of other scientists to carry out an experiment and get the same results. In the words of Marcus Munafo, a biological psychologist, “we want to be discovering new things but not generating too many false leads.”

Valuing reproducibility means walking the tightrope between generating new, interesting ideas in science and keeping the results reliable and well-tested enough to ensure they aren’t just flukes.

But there’s no real consensus on where that line should be drawn.

A 2016 survey by the science journal Nature found that “more than 70% of researchers have tried and failed to reproduce another scientist’s experiments, and more than half have failed to reproduce their own experiments.”

There are several reasons why this could happen. Some are more bureaucratic, such as inconsistent experimental protocols. Some reasons are more pernicious.

Nature also reported that “more than 60% of respondents said that each of two factors — pressure to publish and selective reporting — always or often contributed” to reproducibility problems.

External pressure to publish is a huge weight for many academics and directly contributes to “publish or perish” culture — the notion that if researchers don’t pump out papers, they will be fired or discredited.

This demand can lead to unethical decisions, such as falsifying data or cherry-picking results.

If there’s one thing I’ve learned from my math degree so far, it’s how incredibly easy it is to twist statistics to your own advantage. Researchers can easily throw out data or weight certain data points more heavily to influence the statistical significance of their results.
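To make that concrete, here is a minimal sketch of how discarding a few inconvenient points can flip a result from "not significant" to "significant." The measurements are made up for illustration, and the one-sample z-test assumes a known standard deviation of 1.0 and a null mean of 0; none of this comes from a real study.

```python
from math import sqrt
from statistics import NormalDist, mean


def z_test_p_value(sample, null_mean=0.0, sigma=1.0):
    """Two-sided p-value for a one-sample z-test with an assumed known sigma."""
    z = (mean(sample) - null_mean) / (sigma / sqrt(len(sample)))
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical measurements of some effect (invented for this example).
measurements = [0.9, -1.2, 0.4, 1.5, -0.8, 0.7, 1.1, -1.5, 0.6, 1.3]

# Honest analysis: keep every data point.
p_all = z_test_p_value(measurements)

# Selective analysis: quietly discard the negative values as "outliers".
kept = [x for x in measurements if x > 0]
p_kept = z_test_p_value(kept)

print(f"all {len(measurements)} points: p = {p_all:.3f}")
print(f"after dropping {len(measurements) - len(kept)} points: p = {p_kept:.3f}")
```

With all ten points, the p-value sits well above the conventional 0.05 cutoff. After three points are dropped, it falls below it, even though nothing about the underlying experiment has changed.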

Sometimes tests are just used incorrectly. A lot of statistical tests, like a z-test or ANOVA, come with specific assumptions. The data has to follow certain distributions and rules for a given method to be appropriate. But the reality is that empirical data is often messy and imperfect, and a lot of the time a sample just isn’t right for a certain test.
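As a rough illustration of checking an assumption before running a test, the sketch below computes sample skewness, which is 0 for perfectly symmetric data and grows as the data becomes lopsided. Both samples are invented, and this is only a crude sanity check under those assumptions, not a formal normality test.

```python
from statistics import mean, pstdev


def sample_skewness(data):
    """Population skewness: the mean cubed deviation divided by the cubed standard deviation."""
    m = mean(data)
    s = pstdev(data)
    cubed_deviations = [(x - m) ** 3 for x in data]
    return mean(cubed_deviations) / s ** 3


# Hypothetical samples (invented for this example).
symmetric = [1.0, 2.0, 3.0, 4.0, 5.0]
lopsided = [1.0, 1.0, 1.0, 2.0, 10.0]  # one extreme value drags the tail out

print(f"symmetric sample skewness: {sample_skewness(symmetric):.2f}")
print(f"lopsided sample skewness:  {sample_skewness(lopsided):.2f}")
```

A sample like the second one is a warning sign that a test built on a normality assumption may not be appropriate, at least not without more data or a different method.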

People can misuse statistics in a couple of ways. Some fields don’t emphasize higher-level statistics or teach how to properly account for certain types of samples. On the other hand, sometimes handling your data correctly won’t lead to any interesting conclusions, which are the one thing academics need in order to publish.

Publish or perish culture has consequences: it’s hard to build on knowledge that’s manipulated, incorrect or made up to begin with.

The Guardian reported that in 2023, research journals retracted a record number of academic papers — more than 10,000.

Some scientists are calling the trend a “crisis” as the rise in falsified papers and information leads to corrupted research.

In an ideal world, everything that is published would have gone through thorough reviews and testing. It would be completely dependable. Unfortunately, that’s not the case.

You can easily see the slippery slope effect.

It’s great to publish more papers and contribute more to the field, until all of a sudden it’s the only thing that matters: institutions start pressing researchers to churn out as much research as possible, and quality suffers.

As someone who wants to break into the research field, it feels sort of ambiguously hopeless: an institutional problem that incentivizes you to cut corners lest you fall behind your peers.

There’s no easy way to fix such a widespread, rapidly worsening problem.

Long term, we need to tighten up how papers and ideas are approved and more rigorously examine the policies and attitudes that incentivize wrongful scientific practices.

Because it’s not just unknowns who are breaching these ethical practices.

It’s the credible and well-respected who can get away with it for years.

Plus, to make matters worse, these researchers train and teach up-and-coming scientists, people who are young, inexperienced and often don’t know any better, to use these poor practices.

There’s still hope: some of these people are caught. But a lot of academics never face repercussions.

We have to tighten up our research standards and practices before the steady foundation we build our science upon vanishes for good.

CORRECTION: A previous version of this article stated that Tessier-Lavigne and Ivy League professor breached ethical practices. This has not been confirmed to be true. The updated article reflects this change.