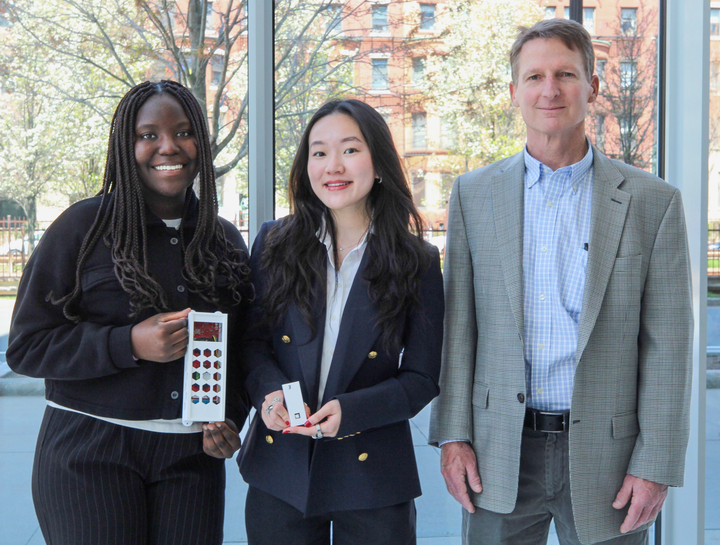

One of the biggest issues plaguing the internet today, and stumping researchers across the globe, is misinformation. But a team of researchers led by Boston University professor Marshall Van Alstyne proposes a solution, one that lies at the intersection of tech and behavioral science.

Van Alstyne, a Questrom School of Business professor of information systems, said his team received a large grant from the National Science Foundation, an independent federal agency that supports science and engineering across the United States, to “explore the question of using markets to address misinformation.” With the $550,000 grant, the team hopes to find an “antidote” to fake news while maintaining free speech and avoiding censorship, according to the Faculty of Computing & Data Sciences website.

Van Alstyne said some of society’s biggest problems — global warming, the pandemic, questions of presidential legitimacy and conflict in the Middle East — can’t be solved if fake news is allowed to run amok.

“We can’t find solutions if we can’t agree on basic facts,” Van Alstyne said. “The current solutions don’t seem to be working and we needed different ones.”

Van Alstyne and his team propose to shift the burden of truth.

“Usually, [researchers try] to put the burden on the user to make more decisions about this. Or sometimes they’ll put the burden on the platform to intercept and then censor when, in fact, the party that knows the truth of the statement typically is the author of that statement,” he said.

Swapneel Mehta, a postdoctoral researcher at BU and a member of the team, said social media has become as “interconnected as an information ecosystem.”

“When there are harms on one platform, they typically translate into different versions of harms on other platforms,” he said.

Instead of evaluating content within a single platform’s database, the program under development will reach beyond it and attach third-party resources to original statements, adding a layer of accountability for whether those statements are true.

Supported by Questrom marketing professor Nina Mazar and David Rand, Massachusetts Institute of Technology professor of management science and brain and cognitive sciences, along with other researchers and professors with expertise in various fields, the research allows for a holistic approach to developing a mechanism that encourages truth.

The theory behind this research rests on a speaker-listener relationship that parallels the one between buyer and seller. At the very heart of the U.S. economic system is the idea of acting in accordance with self-interest, Van Alstyne said.

“Decentralized choices make the best use of their private knowledge,” he said. “Decentralized choices of buyers and suppliers gives you a social optimum.”

The large team is made up of researchers with expertise in psychology, data science, business and engineering, as well as experts in misinformation at Cornell University and MIT.

The diversity of the researchers’ fields adds complexity to the work while bringing the early stages of theory and research “as close to being practically useful as possible,” he said.

On the engineering side, the team developed “machine learning generative models, multi-agent simulations, in coordination with a large volume of public digital trace data,” Mehta said.

Tejovan Parker, a second-year PhD student studying computing and data science at BU and a graduate research assistant on the team, said that discussing fake news requires a new “vocabulary” or “conceptual map.” AI can help make this a reality.

“It’s hard to do research in this field without natural language processing because everything works at this massive scale,” Parker said. “You’d have to have an army of undergrad research assistants manually categorizing with all of their bias.”

While the research is still in its early stages of development, the team has high hopes for the future.

According to Van Alstyne, the team hopes to have internally working code by the end of the fall semester and first test results within the year.

If successful, this research could serve as the jumping-off point for a new way of understanding and distributing information. Restoring credibility and establishing a self-enforcing standard of accountability in media outlets could allow not only for better information but also for better communication as a whole.

“If it works, it’s a hell of a theory,” Van Alstyne said. “But that’s what we’ve got to prove.”