With ChatGPT and OpenAI becoming household names over the last few years, educational institutions like Boston University have faced a pressing question: Is it time to embrace generative AI in the classroom?

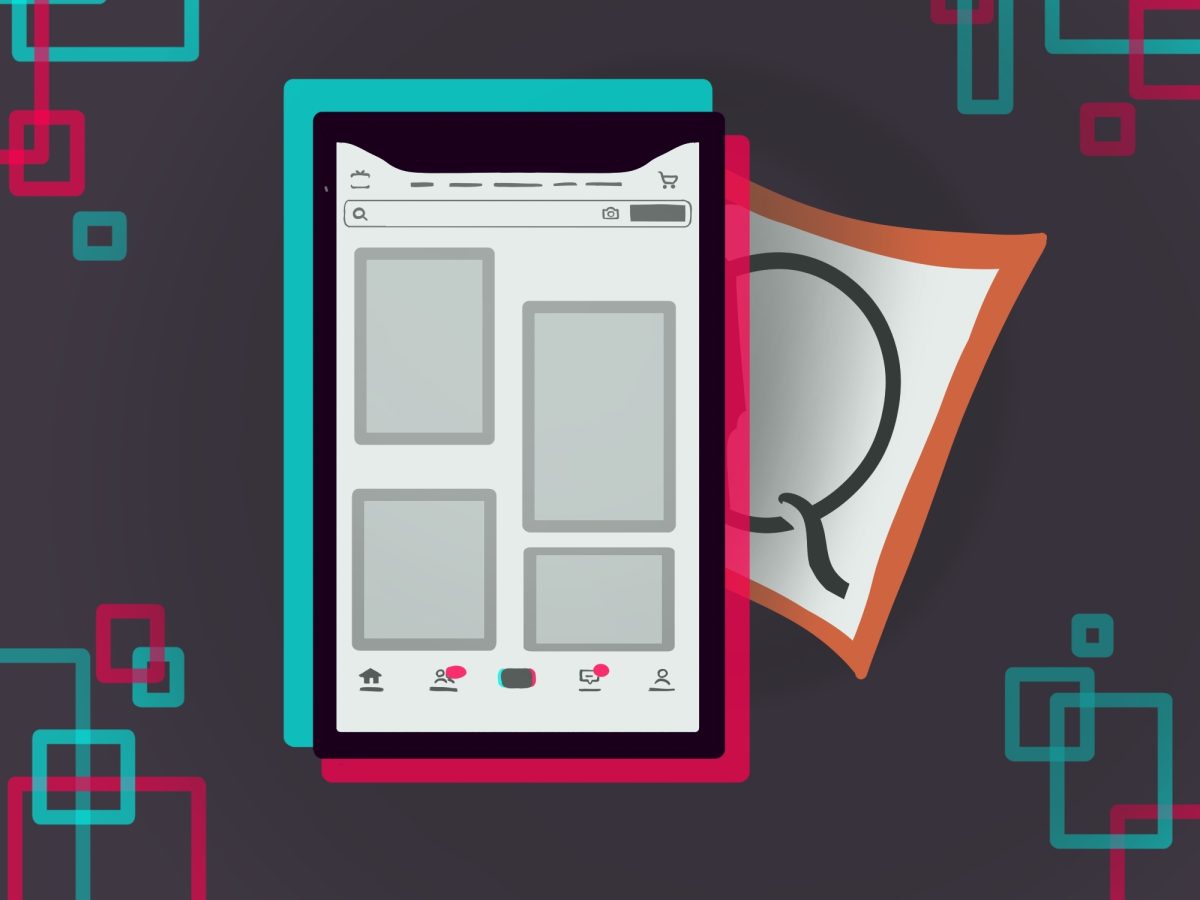

BU’s Artificial Intelligence Development Accelerator team provided an answer with its latest project: TerrierGPT, a generative AI chatbot that brings multiple paid AI models together in one place and will be free for students.

Users can choose from a variety of tools, including large language models from OpenAI, Anthropic, Google, Meta and Amazon, giving them the flexibility to pick the model that best suits their needs, said AIDA Co-Director Yannis Paschalidis.

John Byers, the other co-director of AIDA, said the group’s goal with this project is to “democratize” access to a range of leading AI models.

“We see some students have access to AI. Others don’t have any access to AI,” Byers said. “We wanted to bring something to students that would give them access to a range of tools.”

TerrierGPT offers a “secure platform for exploring generative AI” designed to address gaps in the data protections of many commercial AI chatbots, AIDA Interim Chief AI Officer Bob Graham wrote in an email to The Daily Free Press.

“Our goal was to foster innovation and learning, providing a trusted GenAI resource tailored for the entire BU community,” Graham wrote.

TerrierGPT began taking shape in late December 2024, wrote Graham, who led its implementation. AIDA built TerrierGPT on LibreChat, an open-source chat platform that connects to AI models from multiple providers through a single interface. Because LibreChat is open source, anyone can use, modify or distribute it without paying for a license or subscription.

After choosing LibreChat as TerrierGPT’s foundation, Graham assembled a volunteer team of 15 University experts to “deliver a secure, scalable, and enterprise-grade solution,” he wrote. By March, enterprise agreements were secured with major large language model vendors.

The enterprise agreements give TerrierGPT a key advantage, Paschalidis said.

Unlike personal ChatGPT accounts, where the data users enter could be used to train future models, TerrierGPT keeps all information, including sensitive or proprietary data, secure within BU’s system.

Following the first production release to the implementation team in April, TerrierGPT was made available to faculty and staff in June and then to students later that month, Graham wrote.

BU has already made strides in AI innovation, ranking 26th among the most AI-focused universities in the United States, according to data from Semantic Scholar. Nor is TerrierGPT the first time BU faculty have brought generative AI into the classroom; it is simply the next step.

Over the last few years, some professors have not only introduced generative AI to their students but also encouraged them to experiment with it.

Margaret Wallace, an associate professor of the practice in media innovation at the College of Communication, provided three of her classes with premium ChatGPT during the 2024-25 academic year. She funded this with a $5,000 grant from the Shipley Center, a BU program supporting faculty-driven innovation in the classroom.

“[Generative AI] really can be used in a way that’s transformative,” she said. “I just thought it would be a great opportunity to make this available to students in the interactive media classes.”

Wallace plans to use TerrierGPT in similar ways.

“It’s been so cool to see how it’s being regularly updated, how it’s incorporating different models, how it’s handling data privacy,” she said. “I’m super excited about the fact that we have TerrierGPT as a resource.”

But using generative AI comes with challenges like “hallucinations,” in which the system “provides users with fabricated data that appears authentic,” according to MIT Sloan Teaching & Learning Technologies.

Graham wrote that TerrierGPT is designed not only to support learning and research, but also to teach users “both the capabilities and limitations” of generative AI.

“We hope that students, faculty, and staff will use TerrierGPT to better understand what Generative AI is and isn’t,” he wrote.

Users are therefore encouraged to adopt a “critical embrace,” meaning they should be “very cognizant of the limitations of these models” and their “tendency to hallucinate,” Paschalidis said.

To encourage this critical embrace, AIDA plans to launch an online course within the next few months that will teach students how to use TerrierGPT and cover ethical issues and responsible use.

“There’s an awful lot to know about GenAI, and I think people have a huge range of backgrounds, [from] knowing a lot about the models … to knowing very little,” Paschalidis said. “This [course] is more about giving a grounding, giving a first primer, so people can effectively use these generative AI tools.”

TerrierGPT’s introduction to the classroom has brought both new opportunities and ethical debate.

In an Instagram story posted July 28, junior Estelle Morris urged others to file complaints about the platform through its feedback form.

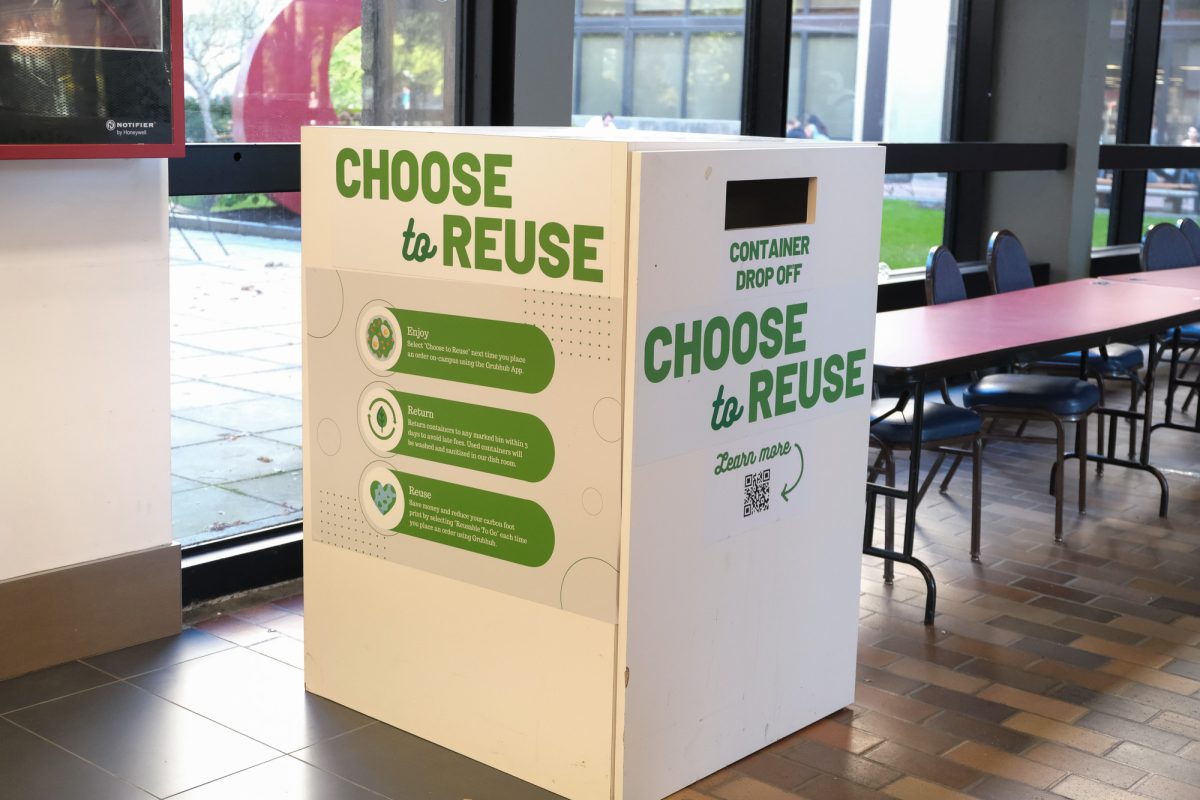

“It was just very jarring as a school that boasts academic integrity and also pledges to be carbon neutral by [2040],” she said.

On Aug. 19, Morris received a reply from BU’s IT Help Center that she described as “vague and minimal.” The response directed her to AIDA’s Frequently Asked Questions page on AI in education.

The page states that training a generative AI model consumes “many orders of magnitude more energy” than using it to answer a query. Morris said that while this is true, each query still uses a lot of energy, and that BU’s response was “not really taking accountability.”

In the classroom, BU professors have set varying rules around AI tools like ChatGPT, with some specifying usage guidelines in their course syllabi.

Paschalidis said AIDA does not intend to dictate how professors or students use generative AI and emphasized that academic freedom gives instructors full discretion over its integration.

“What we have suggested is that individual instructors and faculty will make their own decisions, but at least they should be able to articulate to their students why they made a specific decision,” he said.

The FAQ page states that AIDA “encourages mindful use” of generative AI, which is not intended to “replace all work.”

Morris, however, predicted that many students will rely on the tool to write essays despite the risk of inaccurate information. She said BU should instead encourage students to use library resources and conduct their own research.

“I genuinely, sincerely believe that they could be putting their AI investment into many other beneficial things for the school,” Morris said.