When people hear the word “deepfake,” they might associate it with political manipulation: AI-generated candidates saying politically contentious things, faked endorsements or even completely fabricated speeches.

While this threat is entirely real and relevant, it distracts from one that is far more common and may already have affected someone you know.

According to a 2023 report from Home Security Heroes, pornographic deepfakes make up 98% of total deepfake content, and 99% of them specifically target women. In many cases, “nudifying” models won’t even work on images of boys and men, according to a 2024 survey from Internet Matters.

This particular crisis isn’t unfolding in campaign war rooms or parliaments. It is unfolding on personal devices in dorms and high schools, and spreading through group chats.

As with most tech-enabled harm, the problem isn’t just intent but also infrastructure. Platforms like ClothOff and Nudify openly advertise the ability to undress anyone using generative AI.

In 2023, several male students at Westfield High School in New Jersey were accused of using an AI app to create nonconsensual pornographic images of their female classmates, which were then circulated amongst group chats and social media platforms.

Because state laws on synthetic pornography were underdeveloped at the time, prosecutors struggled to bring charges against the perpetrators in this case. Creating the pornographic media didn’t require any specialized skills, just a photo and a credit card, and it came at the expense of unwilling victims.

These incidents aren’t confined to young men and women.

A 2025 survey by Girlguiding found that 26% of girls aged 13 to 18 had seen sexually explicit deepfake images of celebrities, friends, teachers or themselves. Additionally, a 2023 survey by Thorn found that approximately one in 10 children aged nine to 17 knew of peers who had used AI to create nudes of others.

The Internet Matters survey found that 13% of teenagers in the United Kingdom had encountered nude deepfakes, with more than half of them believing it would be worse to have a deepfake nude created and shared of them than a real image, a sign of how seriously teenagers take the potential impact of such content.

After all, many of the images run through ClothOff have been taken from social media posts, including posts by girls with private accounts. Images can be taken from LinkedIn, school publications or even a passing picture in class. The fault does not rest with girls for having an internet presence, but with the lack of infrastructure in place to prevent the abuse of these images.

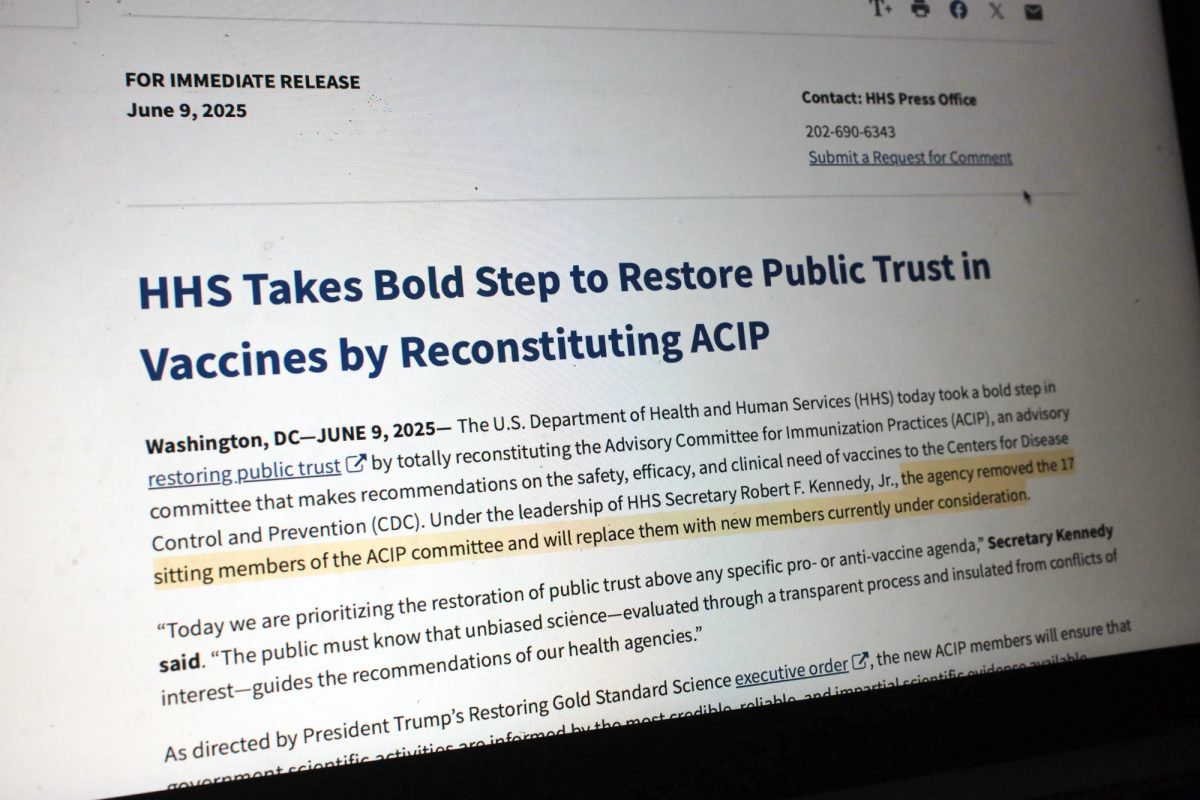

This technology is ahead of the law almost everywhere. In the U.S., the Take It Down Act, enacted in May 2025, outlawed the publication of nonconsensual synthetic pornography, which is a monumental first step but a limited one.

Only a handful of states (Virginia, California, New Jersey, Minnesota and Texas) have criminal statutes specifically addressing deepfake porn, and few schools or universities have incorporated these protections into their Title IX enforcement. According to the Center for Democracy and Technology, only 36% of teachers said their school has a fair process in place that adequately supports victims of nonconsensual deepfake content.

Compare that to South Korea, where authorities have criminalized the creation and possession of nonconsensual deepfake porn, and the U.K., which is moving to make creation a criminal offense punishable by up to two years in prison.

These countries are acknowledging what many students already know: Deepfake porn isn’t a fringe offense, but rather a normalized form of harassment and social control, and laws mean little without systems willing to enforce them.

For students, the real failure lies not just in the gaps between state laws but also in the absence of institutional accountability. Title IX, which prohibits sex-based discrimination and harassment in education, already gives schools the authority to discipline students who create or share sexualized images without consent, according to the U.S. Department of Education.

Despite this, most universities treat deepfake pornography as a one-off incident rather than a civil rights violation. Enforcement shouldn’t stop at reactive investigations. It should include clear disciplinary guidelines for perpetrators, counseling and removal processes for victims, and mandatory digital ethics education.

Until schools treat synthetic sexual abuse with the same seriousness as other forms of sexual misconduct, the Take It Down Act will remain only symbolic protection, not substantive justice.

The conversation around deepfakes will keep centering elections and disinformation unless students, policymakers and institutions force a shift. The real threat isn’t hypothetical political chaos, but rather the fact that someone in your class, fraternity or club could generate a violating image of you instantly without facing any meaningful consequences.

Until policy, infrastructure and campuses treat this as the crisis it is, the abuse will remain invisible by design.