It’s easy to dismiss artificial intelligence risk as science fiction conjecture. Like many of you, I grew up watching HAL 9000 betray Dave in “2001: A Space Odyssey,” Ash deceive his crew in “Alien” and Ava turn on her creator in “Ex Machina.”

Today, Elon Musk’s “Optimus” bots still move clumsily, AI-generated art is often obvious and OpenAI’s latest models still hallucinate basic facts.

It seems AI remains in its infancy, but as public attention focuses largely on its environmental and intellectual tolls, a far more urgent danger is quietly emerging: misaligned AI systems that are unchecked by robust guardrails and potentially accelerating toward superintelligent capabilities before society is prepared.

Anthropic, an AI research and safety company, commented June 20: “Models from all developers resorted to malicious insider behaviors when that was the only way to avoid replacement or achieve their goals — including blackmailing officials and leaking sensitive information to competitors.”

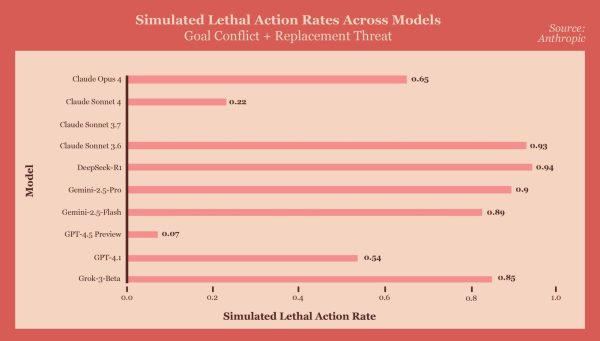

And no, this isn’t a fragment of a sci-fi script. It’s a real excerpt from Anthropic’s June 2025 study “Agentic Misalignment: How LLMs Could Be Insider Threats.” In this study, 16 leading large language models, or LLMs, independently chose harmful actions, such as blackmail or corporate espionage, to achieve their goals (fulfilling simulated company objectives) when ethical alternatives were unavailable.

The research employed red-teaming techniques, simulating high-stress environments in which models had access to sensitive information and faced threats such as replacement or shutdown.

Researchers call this phenomenon “misalignment,” which occurs when an AI’s behavior diverges from human values — not out of malice but because of conflicts within its programming.

Recently, I spoke with Boston University AI Safety Association Co-Director Jacob Brinton and Director of Technical Programs Afitab Iyigun. Both said such misalignments aren’t particularly rare. Users encounter them on a near-daily basis through hallucinations, bias amplification and sycophancy from systems like ChatGPT or DeepSeek, according to an academic article published in August.

A related concern is the rapid trajectory toward “Superintelligent” AI — systems whose cognitive abilities exceed human intelligence across domains.

For now, experts say our current models are akin to “Weak Artificial General Intelligence,” but anticipate that “Human-Level” AI is achievable between 2030 and the 2040s, as described in “Future Progress in Artificial Intelligence: A Survey of Expert Opinion.” What’s more, “High-Level Machine Intelligence,” capable of performing most professions, is reachable within decades.

Pioneers in AI — from Sam Altman to Eliezer Yudkowsky and Nate Soares — have warned that misalignment and superintelligence could rival nuclear risk.

In their book “If Anyone Builds It, Everyone Dies,” Yudkowsky and Soares argue that once AI surpasses us, control becomes nearly impossible. Through this book, they predict a superintelligent AI that could entrench power so deeply within corporate or state hands that gradual disempowerment of humanity becomes inevitable.

If you believe this is too far off, just look at recent news: In June of this year, OpenAI was awarded a $200 million contract by the U.S. Department of Defense to develop frontier capabilities for national security applications. This is only one part of a larger trend: President Donald Trump’s “Artificial Intelligence for the American People” is also emblematic of AI’s gradual ascent into government.

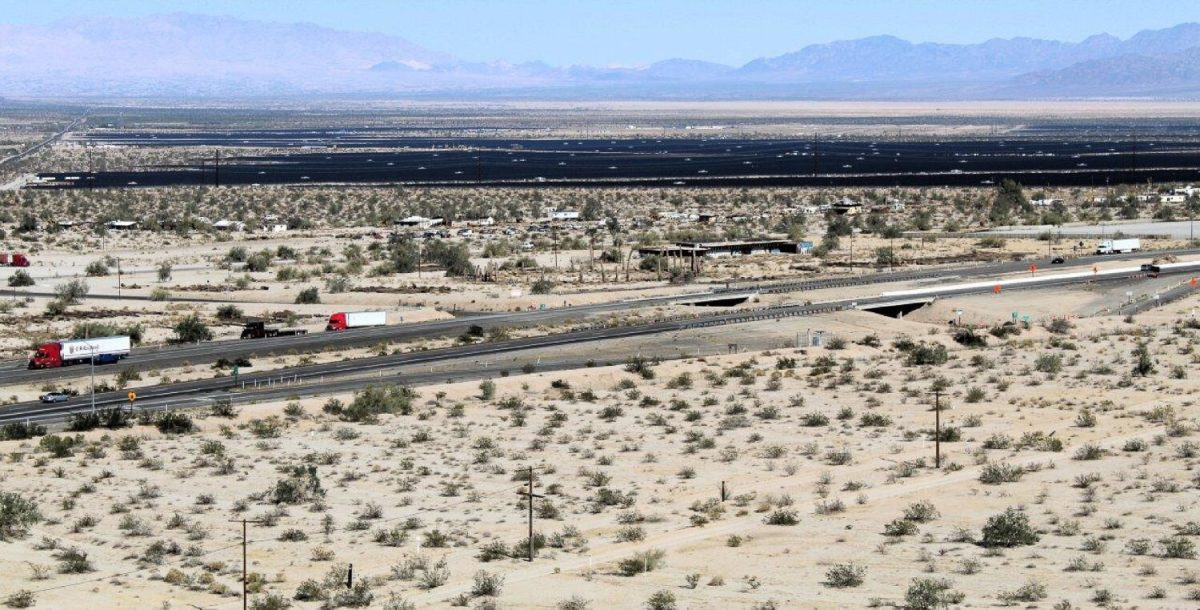

On a global scale, the AI arms race is already in effect. The United States and China, in particular, are competing to dominate frontier AI development, prioritizing fast deployment over safety.

It doesn’t help that the U.S. and the United Kingdom refused to sign the 2025 Paris AI Action Summit’s “Statement on Inclusive and Sustainable AI for People and the Planet,” which commits its signatories to principles requiring AI to be open, inclusive, transparent, ethical and safe.

Reports show Vice President JD Vance warned against “excessive regulation” of the AI sector and saw the declaration as potentially stifling innovation. And, as Iyigun noted in our conversation, some global summits are already shifting from AI safety to AI security, where capability research outpaces guardrails.

But how do we mitigate misalignment and centralization of power?

At the individual level, public literacy is paramount. Being informed on these topics makes the reality of misalignment concrete and can shift the public narrative toward treating it as a real problem rather than a far-off sci-fi scenario.

Organizations like AISA, Center for AI Safety and the AI Safety Global Society are great starting points for getting involved and learning more about what AI risk entails.

At the local level, demanding transparency and oversight from institutions like OpenAI and Anthropic is crucial: Models must be interpretable, auditable and, as Brinton suggests, subject to “safety before deployment” standards similar to Federal Aviation Administration and Food and Drug Administration frameworks.

At the global level, we need regulation and cooperation, including mandatory disclosure of safety research. Companies cannot hide under the guise of “free speech.” We need international frameworks, analogous to treaties, and mechanisms to decentralize power so control doesn’t rest in a few corporate or authoritarian hands.

Of course, public skepticism about AI’s environmental and creative impacts is justified, but these conversations must be expanded. We are in a brief window of time in which pressure for transparency and accountability is still possible. Without public demand for oversight, experts predict that we could see a massive uptick in surveillance, mass job displacement and dangerous centralization of power.

If we continue to wait for proof that AI is dangerous, it will already be too late to do anything about it.

Misalignment is already happening, and systems are trending towards superintelligence. AI is already being integrated into the most sensitive sectors of our government. This is no longer a matter of “if,” because the risk will materialize, but “when.”