Award-winning journalists, students, professors and other experts congregated at Northeastern University’s Raytheon Amphitheater Friday to explore a dark side to artificial intelligence: deep fakes. The conference, titled “AI, Media and the Threat to Democracy,” addressed the rise of AI in the media and its role in democracy.

Northeastern University professors Matt Carroll, David Lazer, Woodrow Hartzog and Meg Heckman organized the conference with Aleszu Bajak, journalist and Northeastern University faculty member.

Carroll said he was motivated to help plan the conference because he saw the potential AI had to revolutionize journalism.

“A lot of people within our department are interested in this [artificial intelligence],” Carroll said. “It’s clearly becoming something of interest to the broader journalism world.”

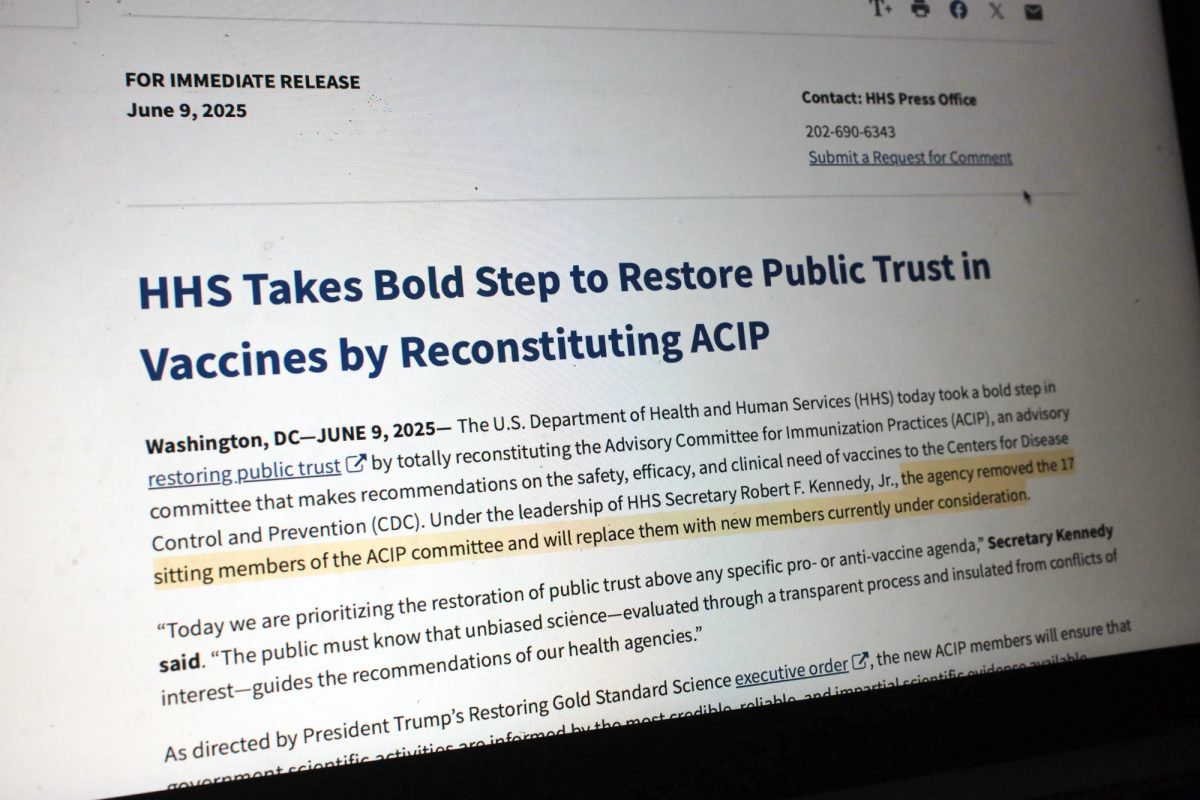

The keynote speaker was Danielle Citron, Morton and Sophia Macht Professor of Law at the University of Maryland’s Francis King Carey School of Law. Citron addressed the rise of “deep fakes,” sophisticated fake audio and video that can be easily produced by people with access to the technology.

Citron warned the audience that the democratization of this technology could have devastating effects on the political process. She discussed the possibility of a fabricated video that incriminates or embarrasses a political candidate surfacing the night before an election.

“The central harm to democracy is the way it sows distrust in the world around us as well as in institutions, journalists and the media being the central [institutions] when we think about democracy,” she said in an interview.

Citron fears that if individuals cannot discern what is real and what is fake, powerful figures will be able to take advantage of this ambiguity and claim that any damaging depiction of themselves is fake. She called this the “liar’s dividend.”

“It’s to claim everything’s a lie even though you know very well it’s true,” Citron said. “[Deep fakes] make it much easier to say, ‘Oh, [that] was a faked video of me, that wasn’t me.’”

Citron said she is also concerned about an erosion of privacy. Market pressures could coerce people to reveal private information to prove that a damaging video of them is fake, she said.

To combat deep fakes, Citron said, people need to look at the bottlenecks, the platforms that have the power to control the spread of deep fakes, and target Section 230 of the Communications Decency Act. The section provides immunity from liability to providers of an “interactive computer service” that publish information from third-party users.

Citron also offered several pieces of advice for ordinary individuals.

“Have some skepticism,” Citron said. “If something seems too sensational to be believed, we should think twice. … If you suspect that what you’re seeing is a forgery, a deep fake, then report it to the platform … engage in counter speak, and challenge it.”

Following Citron’s keynote, there was a panel discussion about the effect of artificial intelligence on journalism.

The panel outlined some of the potential advancements that artificial intelligence brings to journalism, such as faster news responses to emergencies, social listening — using public conversations to understand how people feel about issues — and the ability to search large data sets for patterns.

John Wihbey, a journalism professor at Northeastern University who introduced Citron, said he believes the role of journalists will grow in importance in the coming decades.

“As more open data is made available on the web … the most important data in this world will be hard to get,” Wihbey said in an interview. “That could mean accountability data that an investigative journalist helped unearth, but it could also be other forms of unique data and data sets. Journalists are perfectly positioned to be the locksmiths in this ecosystem.”

After the journalism panel, the third panel emphasized the increasing role of algorithms in people’s daily lives. Panelists discussed the ways that social media sites use algorithms to manipulate search results, sometimes to serve the public good.

The final panel focused on the difficult task of regulating artificial intelligence in the media. The panelists discussed potential legal and policy solutions, as well as the potential costs to freedom and privacy that would come with regulation.

“The bottom line is there is some kind of consent of the governed implicit in power,” Wihbey said. “If, increasingly, decisions that implicate power are performed by what they call ‘black box technology’ — neural networks, deep learning networks that are making very important and complex decisions instantaneously — that could be troubling over time.”