Facial recognition technology continues to develop and gain popularity. From Facebook’s ability to automatically tag friends in photos to law enforcement’s capacity to catch shoplifters, facial recognition technology appears in many realms of modern life.

The Division of Emerging Media Studies of Boston University’s College of Communication hosted a symposium, “Face-Off: Facial Recognition Technologies and Humanity in an Era of Big Data,” April 18 at the Florence and Chafetz Hillel House to address this technology’s ever-growing prevalence.

Professionals from around the world presented perspectives on the advancement of facial recognition.

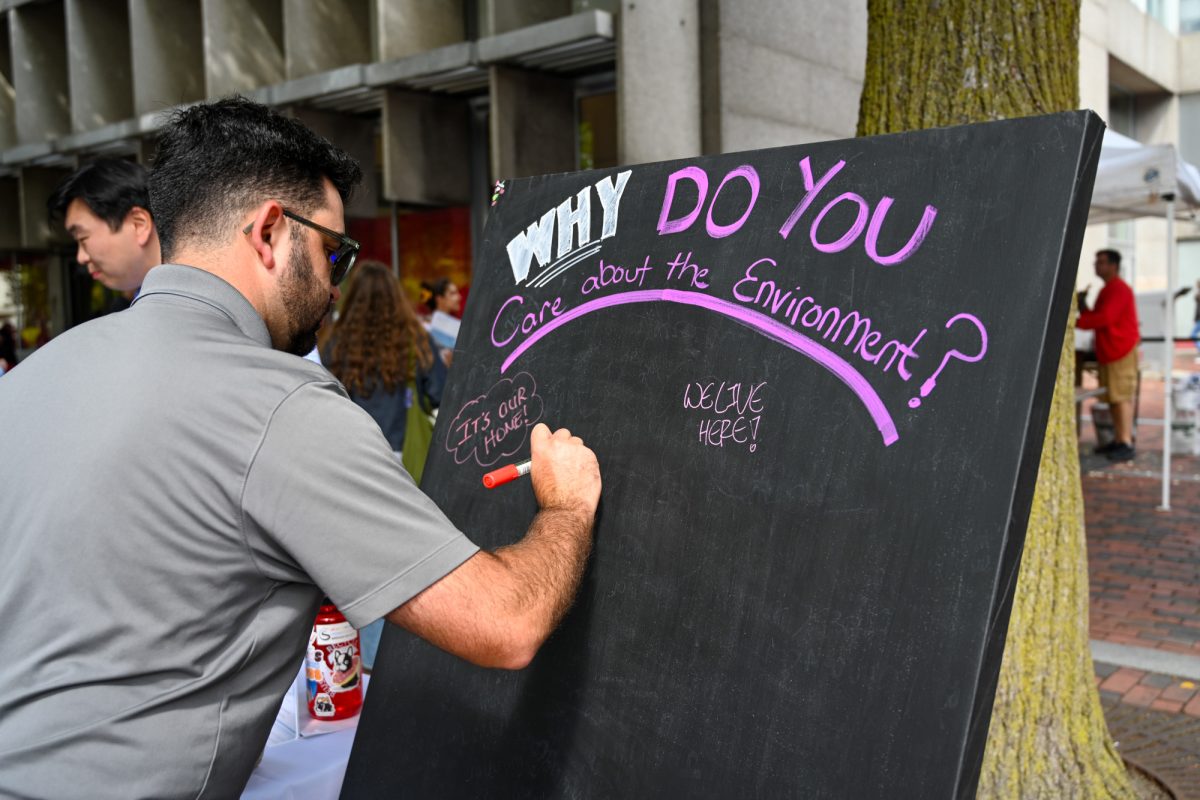

Muyang Zhou, a graduate student in BU’s Emerging Media Studies program, attended the symposium as part of her studies. She said she was inspired by the variety of speakers, who ranged from philosophers to computer scientists.

“I think [the speakers are] a very good combination of researchers from all walks of [the] research field,” Zhou said.

Zhou said she especially admired the computer scientists presenting at “Face-Off” for their humanitarian focus, which she said she had not expected.

“Many of them were talking about data safety, data privacy and ethical problems related to data and algorithms,” Zhou said. “[They] do basic science research and care about humanity.”

Margrit Betke, a professor in BU’s Department of Computer Science and a speaker at the symposium, described the inner workings of facial recognition technology in an interview. Her research focuses on related topics such as face analysis technology.

“Nowadays we use deep neural networks, and they are trained on some data set of faces where you know the name or [identification] of the person,” she said. “Then when they are used, the network analyzes an unknown face and matches it up with the closest match in a database of faces.”
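The matching step Betke describes is, in essence, a nearest-neighbor search. The following is a minimal sketch, not her lab’s method or any real product’s. It assumes a trained network has already converted each face into a short list of numbers (an “embedding”) and compares them by cosine similarity. The function names `cosine_similarity` and `closest_match`, the names in the database and the three-number embeddings are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors (1.0 means identical direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def closest_match(query, database):
    """Return (name, similarity) for the enrolled face most similar to the query embedding."""
    best_name, best_score = None, -1.0
    for name, embedding in database.items():
        score = cosine_similarity(query, embedding)
        if score > best_score:
            best_name, best_score = name, score
    return best_name, best_score


# Hypothetical embeddings of known faces; real networks produce far longer vectors.
database = {
    "alice": [0.9, 0.1, 0.2],
    "bob": [0.1, 0.8, 0.5],
}

# Hypothetical embedding of an "unknown" face produced by the same network.
unknown = [0.85, 0.15, 0.25]

name, score = closest_match(unknown, database)
print(name, round(score, 3))
```

Here the unknown face lands closest to “alice.” Deployed systems typically also reject matches whose similarity falls below a tuned threshold, so a face that resembles no one in the database is not simply assigned the nearest name.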

Zhou said that before the symposium she did not see how facial recognition technology applied to everyday life, but the event changed her perception.

“I thought the applications were limited to [places like] the airport [for] citizens’ safety, but I didn’t really know that there are so many applications of facial recognition,” Zhou said.

Betke listed several applications of facial recognition in daily life.

“Members of the law enforcement, such as police officers, can have access to a database of drivers’ licenses [and] pictures,” she said. “Facebook automatically tags our friends in pictures, and the iPhone X [has a facial] unlocking mechanism.”

Betke also mentioned a “creepy” application, raised by another speaker, that may become reality in the future: a shopping assistant in a store could recognize customers’ faces and match them to a database of consumers’ measurements.

Yet the general public would not welcome facial recognition technology if it infringes on the “right [to be] anonymous,” Betke said. As an example, she cited the Russian government’s use of facial recognition technology to “intimidate” its people and prevent public demonstrations against the government.

Vanessa Nurock, a professor of political theory and ethics at Université Paris 8, took a philosophical view of facial recognition technology and its potential abuses. Nurock said that when this kind of technology is used in military drones, it checks only a person’s identity and not their emotions, which may be a problem.

“People usually don’t consider that moral condition and moral philosophy, [which] may be interesting when you consider facial recognition technology,” she said.

During the panel, Nurock emphasized the importance of thinking about facial recognition technology beyond a risk-versus-benefit point of view.

“As an emerging technology, it is very difficult to assess beforehand what the consequences will be, and so it is very difficult to have a good picture of the risks and benefits,” Nurock explained in an interview.

Facial recognition technology does more than merely recognize identities, she said.

“It’s about having a moral and political relationship with someone,” Nurock said. “Facial recognition is not only about assorting identities. It’s also about bodily response, emotional response.”

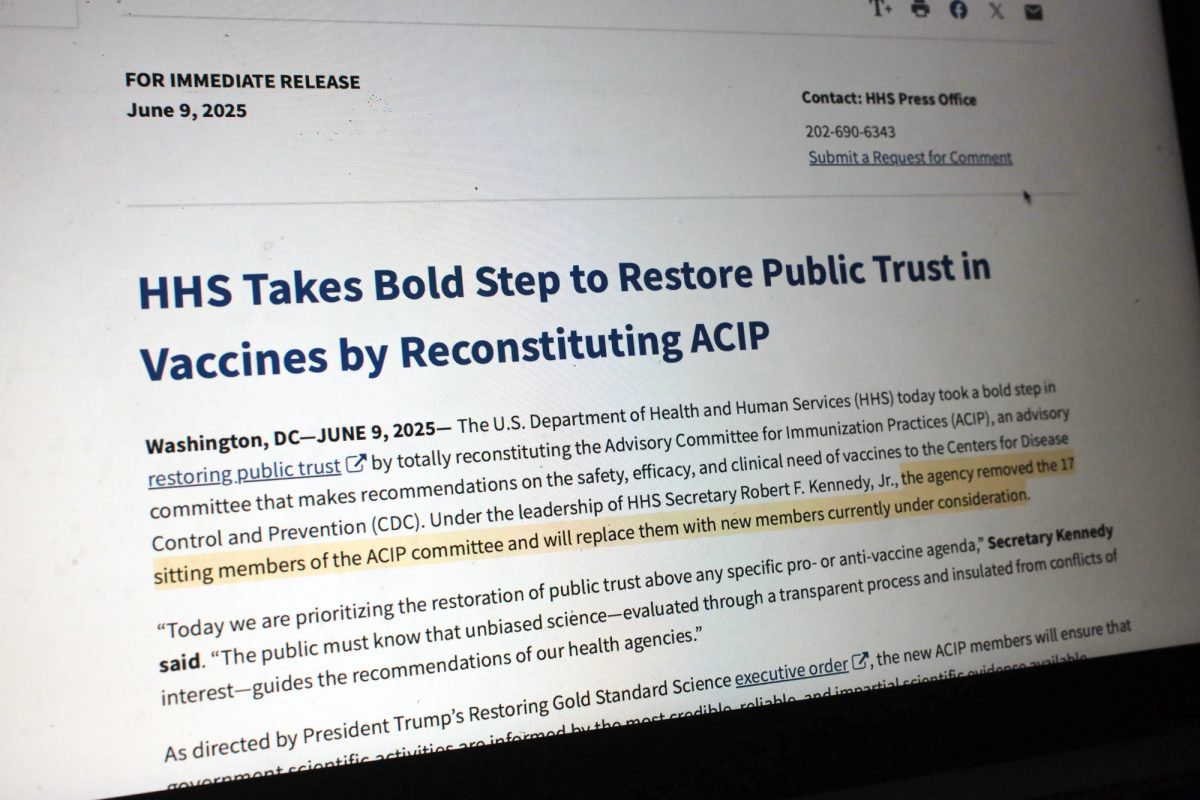

She said she believes facial recognition technology needs to be regulated.

“This means that right now, we are social experiments,” Nurock said. “Since there’s no laws, people are not aware of that … and not asked whether they want this experimentation.”

Nurock stressed the importance of thinking not only about the consequences of facial recognition technology but also about its significance.

“It could change the way we behave toward each other,” she said. “It could change our moral and political relationships.”