In less than three years, widespread artificial intelligence has transitioned from a far-off, futuristic fantasy to a tangible, everyday reality.

Since the introduction of ChatGPT by research company OpenAI in November 2022, generative AI models — which are trained to use previously existing information to produce new text and images — have become ubiquitous.

As such, almost every sector of society, from Hollywood to higher education, has been forced to grapple with the unknown implications of the rapidly advancing technology.

The journalism industry has yet to develop consistent policies regarding the use of AI. Proponents believe embracing the technology is the only way forward, while skeptics emphasize the need for strict regulation.

In a field where truth is paramount and plagiarism is prohibited, it’s essential to create clear ethical and professional boundaries to determine how AI is used in the newsroom.

Many news outlets, including The New York Times, have already begun to use AI to generate headline drafts and translate articles. Advocates argue this usage provides reporters with time to focus their energy and attention on important investigative pieces rather than menial tasks.

However, the quality of AI-generated writing has proven to be inconsistent at best. At times, it’s been actively damaging to both the subjects of the stories and the reputations of the outlets that have published them.

In September 2023, MSN published an obituary of former NBA player Brandon Hunter with a bafflingly disrespectful headline: “Brandon Hunter useless at 42.” Although Microsoft never confirmed or denied the use of AI in its construction, the now-deleted obituary’s prose was littered with confusing word choice, signaling a distinct lack of human oversight.

Generative AI often passes off false information as true, a phenomenon that has become commonly known as “hallucinations.” Even more harmful, these AI chatbots can blend fact and fiction within the same sentence, making it difficult to determine the accuracy of their claims.

Journalists who turn to chatbots for help with background research to cite in their published work should be aware of possible misattribution. A 2024 Nature study found that models like ChatGPT made errors 30% to 90% of the time when generating scientific references.

A variety of non-generative AI tools have also been integrated into newsrooms. Programs such as Otter.ai and Descript can save time by transcribing recordings of long interviews. Others provide quick summaries of public meetings, scrape and clean data or track website updates.

These tools exemplify what artificial intelligence does best: assisting with repetitive and monotonous tasks without taking over the reporting and writing itself.

But even seemingly innocuous uses of generative AI tools, like generating interview questions, paraphrasing quotes or organizing lengthy notes into a more digestible format, are not as harmless as they seem.

Large language models like ChatGPT are built on the transformer architecture, which builds connections by tracing patterns between different concepts and makes predictions based on context. Pre-existing stereotypes, discrimination and biases have been shown to be deeply embedded in these models, stemming from the data used to train them.

A research paper published by Nature in 2024 found that although overt racial bias in generative AI has subsided, covert racism remains prevalent in large language models. The researchers found that the models are more likely to suggest that speakers of African American English “be assigned less-prestigious jobs, be convicted of crimes and be sentenced to death.”

The Associated Press Stylebook, a style guide used by thousands of newspapers around the globe, regularly updates its guidelines to reflect societal changes in conversations about race, gender and disability, among other topics. Relying on biased models as a resource seems counterproductive in a field that strives to achieve objectivity, inclusivity and fairness.

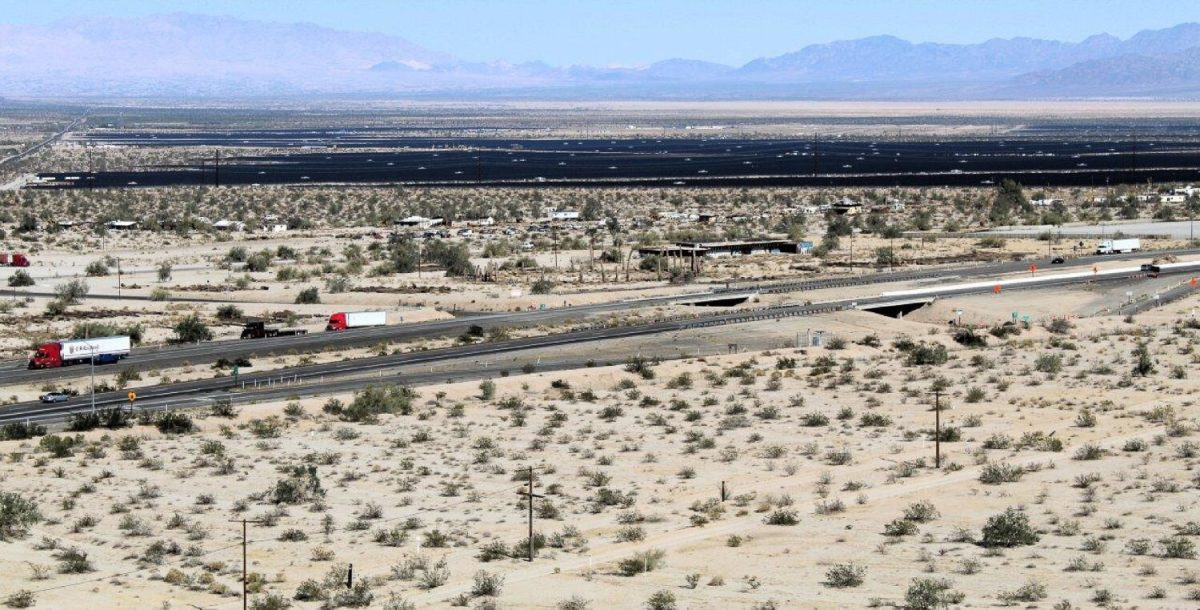

In an age of growing climate change concerns, the detrimental environmental impacts of AI use should also ring alarm bells. The computational power generative AI requires produces significant carbon dioxide emissions and consumes large amounts of water, which is used to cool the hardware that trains and runs these models.

A significant amount of human oversight is still required when integrating AI into journalism, whether to make copy edits, catch false information or recognize problematic biases.

A post on X from writer Joanna Maciejewska said it best: “You know what the biggest problem with pushing all-things-AI is? Wrong direction. I want AI to do my laundry and dishes so that I can do art and writing, not for AI to do my art and writing so that I can do my laundry and dishes.”

Ultimately, generative AI will never be able to replace the uniquely human skills that characterize good reporting.

ChatGPT can’t interpret the nuance of a fleeting facial expression or a subtle vocal inflection. It can’t wait outside the mayor’s office for an early-morning interview or embed itself into the life of a profile subject. It can’t interview disaster survivors with empathy and understanding or hold world leaders accountable with skepticism and perseverance.

Focusing on maximizing efficiency and cutting costs by employing artificial intelligence over real reporters will only harm the news industry in the long run. We must take action to protect both journalists as individuals and journalism as a societal necessity.

AI isn’t going away — but that doesn’t mean we should blindly accept its encroachment into areas that are best left for human hands.

This Editorial was written by Opinion Co-Editor Ruby Voge.